Tree House Humane Society: UX Architecture Redesign of a Mobile Website

My Role

This project was completed during an Information Architecture class in the HCI Master of Science program at DePaul University. I made the following contributions to this project:

Recruited participants for Card Sort Testing, IA Testing and First-Click Testing

Moderated two remote virtual Card Sort sessions

Analyzed portions of the original website content inventory

Programmed IA Test and First-Click Tests

Created First-Click Testing wireframes for the task: “find the online magazine to read an article”

Wrote the results and analysis for Card Sort Testing and IA Testing

Wrote annotations for portions of the sitemap

Wrote the “future directions” and portions of “lessons learned”

Overview

Treehouse Humane Society is a Chicago-based no-kill shelter dedicated to the temporary and long-term care and placement of stray cats. The organization offers a variety of services, including veterinary care, animal education classes, and fostering and adoption programs.

Our three-person team reviewed the Treehouse Humane Society’s website and noticed several issues that could confuse users and potentially decrease conversion rates for adoptions, donations, and volunteer applications. The primary issues we found were:

Unclear navigation labels

Confusing organizational structure that buried important information

Inaccessible Call-to-Action links

A cluttered homepage with repetitive and unnecessary content and navigation

Based on our initial observations of the Treehouse Humane Society website, we created a 9-week research and testing plan. We used a combination of Hybrid Card Sort Testing, IA Testing, and First-Click Testing to investigate the issues we uncovered. Multiple testing types and rounds gave our team greater insight into potentially challenging areas and allowed us to create recommendations for a website redesign.

We used the following digital tools throughout the project:

Optimal Workshop for Virtual Card Sorting, IA Testing, and First-Click Testing

Zoom with screen sharing for moderated Virtual Card Sorting

Axure RP for wireframing key screens

Lucidchart for graph and sitemap design

Defining Areas of Focus

Problem Statement

Misleading navigation labels and an unclear organizational structure make locating information on the Treehouse website unnecessarily time-consuming and difficult for users. How can we make content more accessible to users and increase website use?

User Profiles

We used our initial review of the website content to create two user profiles that represent potential users of the Treehouse website. One user had the primary goal of adopting a cat from Treehouse, and the other was focused on volunteering with the organization.

Objective

Our objective was to assess the Tree House Humane Society website’s information architecture, uncover and correct major usability problems, and improve overall website usability.

Key Tasks

We structured our key tasks around our personas’ main goals on the Treehouse website. Focusing on key user tasks allowed us to target the experiences of users whose goals are similar to those of our two personas, Dina and Alexis. We defined one primary task for each persona and listed the steps needed to complete it.

Key User Task (based on user profile 1): Find out which cats are available for adoption at Treehouse.

Steps to Complete the Task:

Key User Task (based on user profile 2): Compare the different volunteer programs at Treehouse.

Steps to Complete the Task:

Content Inventory

After researching and defining the problem and organization, we analyzed the amount and type of content on Treehouse Humane Society’s website. This gave our team an overview of the website’s content and structure before we began implementing changes. We used an Excel spreadsheet to code all main navigation and content items.

This is what we learned from conducting the content inventory:

Navigation consisted of 4 levels, which buried some content that could be moved higher up in navigation to make it more accessible to users.

Many key call-to-action items and links were buried within multiple nested pages. Specifically, all of the information on volunteer programs was buried three navigation levels deep (Engage > Volunteer > Volunteer Programs). This requires users to put more work into finding information and increases the likelihood that they will get lost, distracted, or frustrated and leave the website without completing their tasks.

We catalogued 92 different content items throughout the multiple navigation levels, and concluded that some of this content could be consolidated to declutter the website.

The Treehouse website takes up a lot of screen space with redundant links. Specifically, the website footer essentially features an entire sitemap. Many links in multiple locations take up valuable screen space, and require users to wade through unnecessary information to complete simple tasks.

Vague navigation labels make it difficult to understand what content is featured where, without looking at the actual content.

The Treehouse website features a wide range of services, programs, events, and educational information. Different content types are currently mixed together, with no clear distinctions or organization. This lack of organization makes it difficult for users to know what to expect when searching for specific information, or how to navigate the website most efficiently.
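The depth finding above can be made concrete by recording each inventory item as a navigation path and counting its levels. The following is a minimal sketch, not our actual spreadsheet: only the volunteer path comes from the inventory findings, and the " > " separator and the helper names (nav_depth, items_deeper_than) are assumptions made for illustration.

```python
# Hypothetical helpers for a content inventory. Only the path listed in
# inventory_paths comes from the findings above; the rest is illustrative.


def nav_depth(path: str, separator: str = " > ") -> int:
    """Return the number of navigation levels in a path such as 'A > B > C'."""
    return len([part for part in path.split(separator) if part.strip()])


def items_deeper_than(paths: list[str], max_level: int) -> list[str]:
    """List the paths that sit below the given navigation level."""
    return [p for p in paths if nav_depth(p) > max_level]


inventory_paths = ["Engage > Volunteer > Volunteer Programs"]

for path in inventory_paths:
    print(f"{path}: level {nav_depth(path)}")

# Flag anything deeper than two levels as a candidate to move up.
print(items_deeper_than(inventory_paths, max_level=2))
```

Running the same check over a full list of inventory paths would highlight every item that is a candidate for moving higher in the navigation.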

Testing

Over the course of nine weeks, we conducted multiple rounds of testing on our restructured Treehouse Humane Society website navigation and content. We began the process with two rounds of virtual Hybrid Card Sorts. We then completed three rounds of IA Testing covering five tasks. We finished with one First Click Test that covered three tasks.

Card Sort Testing Round One

Method & Objectives

We began our testing process with a moderated virtual Hybrid Card Sort, using OptimalSort and screen sharing through Zoom. Through word of mouth, we recruited 6 participants who were cat or dog owners. Participants were asked to organize 34 cards that represented second level navigation items from the Treehouse Humane Society’s website, and place them under the following 10 categories:

The cards we used represented items we felt were most important to include in our navigation, based on our evaluation of the content inventory, and the tasks we created for our personas. Some card and category names were modified from those in the original content inventory, to make content more easily accessible and eliminate unnecessary third and fourth level navigation.

After the Card Sort was completed, we asked each participant a series of questions, to gain additional insight into the choices they made during the card sort. We adjusted the questions we asked, based on their relevance to the participant’s results.

Results & Analysis

During the first Card Sort, the Catalyst Magazine, Cat Intake and Blog cards presented the greatest range of categorizations, with each card split across 4 or 5 categories. This indicated that participants either felt these cards could belong in multiple categories or were unclear on the meaning or context of the cards.

Three participants created new categories, including Events, Media and Socials. 100% agreement was only reached in the Foster category, followed by 92% agreement in the Employment category. Cat Health had the lowest agreement rating, at 40%. The average agreement rating was 67%.

Participants disagreed on the categorization of 50% of the cards:

Adoption Hours

Adoption Programs

Blog

Business Partnerships

Cat Intake

Catalyst Magazine

Emergency Vets

Feral Cat Services

Food Pantry

Fundraising Events

Internships

Meet the Staff

Press

Shelter Practices

Sponsorship

Treehouse History

Veterinary Care

We learned that unfamiliar words and lack of context were barriers to agreement amongst participants, and decided to refine card names for our next Card Sort.

Card Sort Testing Round Two

Method & Objectives

For our second round of Card Sort Testing, we conducted an unmoderated virtual Hybrid Card Sort with OptimalSort. We recruited 16 participants through word of mouth and by distributing the card sort link to graduate students in the HCI program. Participants were asked to organize 32 cards that represented second level navigation items from the Treehouse Humane Society’s website, and place them under the following 9 categories:

Based on the results of our first card sort, we combined Adopt and Foster into one category. We also updated the names of several cards, to make the language more understandable. We changed Treehouse History to Our Story, Business Partnerships to Our Business Partners, Food Pantry to Food Pantry Fund and Cat Intake to Shelter Admissions.

The goal of this Card Sort was to see how the changes we made to the category and card names impacted participant agreement. We analyzed the results to build our first IA Test.

Results & Analysis

During the second Card Sort, the Educational Programs, Fundraising Events, Lost Cat Help, Shelter Admissions, Shelter Practices, Sponsor a Cat and Blog cards presented the greatest diversity in categorization. The highest agreement reached was 74% in the Donate category. Volunteer had the lowest agreement rating, at 19%. The average agreement rating was 39%. Participants disagreed on the categorization of all 32 cards.

The most challenging aspect of this Card Sort was that 5 new cards emerged with categorization across 5 or 4 categories, including Educational Programs, Fundraising Events, Lost Cat Help, Shelter Practices, and Sponsor a Cat cards. Shelter Admissions and Blog also showed categorization diversity in 5 and 4 categories, respectively, as they did in the first Card Sort. The dramatic differences between our first and second Card Sorts made it difficult to pinpoint the changes we should implement in our IA Testing.

IA Testing Round One

Method & Objectives

We conducted our first round of IA Testing using Optimal Workshop's Treejack. We recruited 7 participants through word of mouth and by distributing the test link to other graduate students in the HCI program. We asked participants to complete 5 tasks focused on adopting, volunteering, reading the online magazine, taking a cat to the shelter, and making a vet appointment.

Our goal with the first IA Test was to see how successful participants were in completing the tasks we provided.

Results & Analysis

Task 1: Find cats that are available for adoption.

6 of 7 participants successfully completed this task, and all 6 navigated directly to Adoption & Fostering > Adoptable Cats.

Task 2: Find a volunteer opportunity.

All 7 participants successfully completed this task, and 6 of 7 participants navigated directly to Volunteer Programs and selected 1 of the 3 programs.

Task 3: Read an article in the Treehouse online magazine.

1 of 7 participants successfully completed this task, and navigated directly to About Us > Media > Publications. Based on the low success rate, we decided the original task scenario was too long and confusing for participants, and decided to simplify it for the next round of IA testing.

Task 4: Find out if Treehouse will accept the sick cat you found, and cannot keep.

2 of 7 participants successfully completed this task, but both navigated indirectly to About Us > Our Shelter Practices > Shelter Admissions. 4 of 7 participants began their search at Services instead of About Us, so we felt it was worth considering a navigation change to better match the mental model of the majority of our participants. Based on the low success rate, we decided the original task scenario was too long and confusing, and needed to be simplified for the next round of IA Testing.

Task 5: Make an appointment with the vet at Treehouse.

5 of 7 participants successfully completed this task. 1 participant navigated directly to Services > Veterinary Care, and the other 4 participants navigated indirectly. 6 of 7 participants began their search to accomplish this task at Cat Health, instead of Services. We felt it was worth considering changing the navigation to better match the mental model of the majority of our participants.

Based on the lower success rates for the tasks of reading a magazine article and taking a cat that was found to the shelter, we decided that we needed to simplify our tasks and change some of the verbiage for our next round of IA Testing. We also considered reorganizing the navigation of some menu items, based on the paths participants chose when trying to complete these two tasks.

IA Testing Round Two

Method & Objectives

We conducted our second round of IA Testing using Treejack. We recruited 10 participants through word of mouth and by distributing the test link to graduate students in the HCI program. We asked participants to complete the same 5 tasks used in the first IA Test. These focused on adopting, volunteering, reading the online magazine, taking a cat to the shelter, and making a vet appointment (see Appendix Item 7 for the complete tasks).

We implemented the following changes in round 2 of IA Testing:

We simplified the tasks of reading a magazine article and taking a cat to the shelter.

We moved Shelter Admissions under the first level Services navigation.

We moved Veterinary Care under the first level Cat Health navigation.

Our primary goal with this IA Test was to gain additional data on the tasks that we updated, since they presented the greatest challenges for our participants during the first round of testing.

Results & Analysis

Task 1: Find cats that are available for adoption.

9 of 10 participants successfully completed this task, and 8 of those 9 navigated directly to Adoption & Fostering > Adoptable Cats.

Task 2: Find a volunteer opportunity.

9 of 10 participants successfully completed this task, and 8 of those 9 navigated directly to Volunteer Programs and selected 1 of the 3 programs.

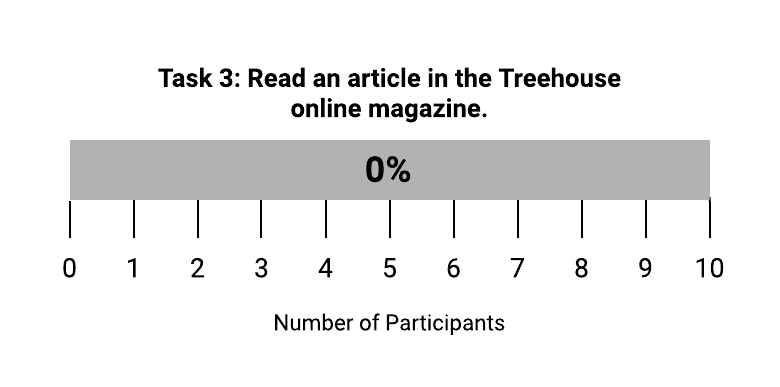

Task 3: Read an article in the Treehouse online magazine.

None of the 10 participants successfully completed this task. All failed to navigate to About Us > Media > Publications. Based on the drop in success from the first round of IA Testing, we decided the task scenario was still confusing for participants, even after we simplified it. We also questioned whether Media was the correct navigation label.

Task 4: Find out if Treehouse will accept the sick cat you found, and cannot keep.

2 of 10 participants successfully completed this task, and only 1 of these participants navigated directly to Services > Shelter Admissions. Based on the low success rate, we decided the task scenario was still too long and needed to be reworded so that the task goal was clearer to participants.

Task 5: Make an appointment with the vet at Treehouse.

7 of 10 participants successfully completed this task. 5 participants navigated directly to Cat Health > Veterinary Care, and the other 2 participants navigated indirectly.

The success rates were already low in the first round of IA testing, for the tasks of reading a magazine article and taking a cat that was found to the shelter. We were surprised and disappointed that the success rates for these tasks dropped even further in our second round of IA Testing. We felt that we had too many unanswered questions around these two tasks, and a third round of IA testing was necessary before we could move on to creating a new sitemap.

IA Testing Round Three

Method & Objectives

We conducted our third round of IA Testing using Treejack. We recruited 5 participants through word of mouth. We asked participants to complete the same 5 tasks used in the first two IA tests. These focused on adopting, volunteering, reading the online magazine, taking a cat to the shelter, and making a vet appointment (see Appendix Item 7 for the complete tasks).

Our primary goal for the third round of IA Testing was to see whether the changes we made based on the results of the second round increased participant success. We were most concerned with the tasks of finding a magazine article to read and taking a cat to the shelter.

We implemented the following changes in round 3 of IA Testing:

We changed the second level Media navigation to News, because we thought this label would be more understandable for participants.

We moved Shelter Admissions back under the About Us navigation, as a second level navigation item, rather than a third level navigation item. We thought this would make it easier for participants to find.

Results & Analysis

Task 1: Find cats that are available for adoption.

All 5 participants successfully completed this task, and all navigated directly to Adoption & Fostering > Adoptable Cats.

Task 2: Find a volunteer opportunity.

All 5 participants successfully completed this task, and 4 of 5 participants navigated directly to Volunteer Programs and selected 1 of the 3 programs.

Task 3: Read an article in the Treehouse online magazine.

2 of 5 participants successfully completed this task, but both navigated indirectly to About Us > News > Magazine. Though only 2 of the 5 participants accomplished this task, we felt the restructured navigation was a positive change. We believed the lack of context around the magazine made this task more challenging, and discussed including it in our First Click Testing for further clarity.

Task 4: Find out if Treehouse will accept the sick cat you found, and cannot keep.

None of the participants successfully completed this task by navigating to About Us > Shelter Admissions. 4 of 5 participants began their search at Adoption & Fostering instead of About Us. This led us to consider changing the navigation to better match the mental model of the majority of our participants.

Task 5: Make an appointment with the vet at Treehouse.

4 of 5 participants successfully completed this task. 2 of these participants navigated directly to Cat Health > Veterinary Care, and the other 2 navigated indirectly.

Though our success rates for the tasks of reading a magazine article and taking a cat to the shelter were lower than we anticipated, round 3 of IA Testing was beneficial. By examining the paths that participants took when trying to take a cat to the shelter, we felt prepared enough to move forward with a new sitemap. The success rate for finding a magazine article rose from 0% in round 2 to 40% in round 3, which also increased our confidence. Ideally, we would have preferred 10 to 15 participants for this round of IA Testing, and higher success rates for some tasks. Because we had not originally built a third round of IA Testing into our timeline, and had not anticipated such low success rates in round 2, we did not have enough time to recruit more participants.
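To compare the three IA Testing rounds side by side, the reported counts can be turned into success rates. This is a minimal sketch using only the successes and participant counts stated in the results above; the dictionary layout, the short task labels, and the success_rate helper are illustrative assumptions, not part of our Treejack export.

```python
# (successes, participants) per task for IA Testing rounds 1, 2 and 3,
# copied from the results reported above. The short task labels and the
# dictionary layout are illustrative choices.
ia_results = {
    "Adoptable cats":     [(6, 7), (9, 10), (5, 5)],
    "Volunteer":          [(7, 7), (9, 10), (5, 5)],
    "Online magazine":    [(1, 7), (0, 10), (2, 5)],
    "Shelter admissions": [(2, 7), (2, 10), (0, 5)],
    "Vet appointment":    [(5, 7), (7, 10), (4, 5)],
}


def success_rate(successes: int, participants: int) -> float:
    """Percentage of participants who completed the task."""
    return 100 * successes / participants


# Print one row per task with the success rate for each round.
for task, rounds in ia_results.items():
    rates = [f"{success_rate(s, n):.0f}%" for s, n in rounds]
    print(f"{task:<20}" + "  ".join(rates))
```

Because each round had a different, small group of participants (7, 10 and 5), these percentages are best read as rough indicators rather than precise measurements.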

Sitemap Redesign

During the initial content inventory process, we identified the need to relocate and rename many of the menu navigation items. After two rounds of Card Sorts, we created a rough revised sitemap. We further refined and annotated this sitemap after we completed our IA Testing. We reduced the number of navigation levels from the original four to three.

We reworded many of the website’s original navigation item labels to make their content clearer. We changed:

Leadership to Our Staff

Intake Criteria to Shelter Admissions

Ways to Give to Donations

Humane Education to Educational Programs

During this process, one of our major challenges was redistributing content that was originally housed under the Resources navigation label. This label contained a variety of unrelated second and third level navigation items, including Programs, Emergency Vets, Progressive Shelter Practices, Caring for Cats and Lost Cats.

Wireframing

We focused on the tasks our participants found most difficult in IA Testing, and sketched main task flow screens for these, then wireframed them in Axure. We developed screens for these tasks:

Task 1: Find the online magazine to read an article

Scenario: “You want to read an article in the Treehouse Humane Society's online magazine. Where would you look for this?”

Task 2: Make a veterinary care appointment

Scenario: “The cat you adopted from the Treehouse Humane Society last year isn't feeling well. You want to make an appointment with the vet at Treehouse Humane Society sometime this week. Where would you look for this?”

Task 3: Find the shelter admissions protocol

Scenario: “You find a cat that you cannot keep, and you want to know if the Treehouse Humane Society will accept the cat. Where would you look for this?”

We developed a clear user flow across the wireframe screens, to accomplish each of the three tasks. All tasks started from the Home Page, which contained multiple points of entry to the website content.

First Click Testing

Methods & Objectives

First Click Testing was the final stage in our testing process. Our goal was to further test the tasks we still had concerns about after our final round of IA Testing. We recruited a total of 10 participants through personal connections. Our team identified three possible paths to complete each task: a direct link button to the specific content, the search bar, and the hamburger menu.

During the First Click Testing, participants were prompted to complete three separate tasks:

Find the online magazine to read an article

Make a veterinary care appointment

Find the shelter admissions protocol

Results & Analysis

Task 1: Find the online magazine to read an article

8 of 10 participants successfully completed this task. All successful participants clicked directly on the “Magazine” link in the carousel. Of the two participants who failed to complete the task, one clicked on the “Blog” link, and the other clicked the “Next” arrow in the carousel. The average task completion time was 9.29 seconds. Results indicate that most users could easily locate the information they were searching for. Though task completion rates were low for this task throughout IA Testing, these First Click Test results suggest that providing more context and visual design substantially increased success rates.

Task 2: Make a veterinary care appointment

All 10 participants successfully completed this task. 7 participants clicked on the “Veterinary Care” photo button. The other 3 participants clicked on the “hamburger menu” icon. The average task completion time was 11.4 seconds. Results indicate that users could easily locate the information they were searching for.

Task 3: Find the shelter admissions protocol

All 10 participants successfully completed this task by clicking directly on the “Shelter Admissions” photo button, with an average task completion time of 19.07 seconds. Results indicate that users could locate the information they were searching for. Though task completion rates were low for this task throughout IA Testing, these First Click Test results suggest that providing more context and visual design substantially increased success rates.
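The improvement from the final IA Testing round to the First Click Test can be expressed as a percentage-point change per task. The sketch below uses only the counts reported in the two results sections; the comparison dictionary, the labels, and the helper names are assumptions for illustration. The two tests used different methods and different small groups of participants, so the differences are indicative only.

```python
# (successes, participants) for IA Testing round 3 and the First Click Test,
# copied from the results reported above. Labels and layout are illustrative.
comparison = {
    "Online magazine":    {"ia_round_3": (2, 5), "first_click": (8, 10)},
    "Vet appointment":    {"ia_round_3": (4, 5), "first_click": (10, 10)},
    "Shelter admissions": {"ia_round_3": (0, 5), "first_click": (10, 10)},
}


def pct(result: tuple[int, int]) -> float:
    """Convert a (successes, participants) pair to a percentage."""
    successes, participants = result
    return 100 * successes / participants


def change_in_points(task: str) -> float:
    """Percentage-point difference between the two tests for one task."""
    results = comparison[task]
    return pct(results["first_click"]) - pct(results["ia_round_3"])


for task in comparison:
    print(f"{task}: {change_in_points(task):+.0f} percentage points")
```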

Lessons Learned

Participants' mental models can vary greatly, and may be very different from ours.

Participants thought certain navigation items would be found under first level navigation items that we didn't initially consider. We adjusted our navigation based on the paths they chose in the IA tests, even when they did not successfully complete tasks. This showed us where they expected information to be located.

It’s difficult to anticipate test results, especially when different participants are used across tests.

Changes we thought would increase participant success in accomplishing tasks, based on test results, actually produced lower success rates. We had to look at the paths people chose to try to better understand whether these changes made sense, and decide how to move forward.

Clear and understandable language is critical for reliable testing results.

We initially made our tasks too long and detailed, which confused participants. We should have kept tasks short and straightforward, so participants were not distracted, and focused only on the tasks.

If there isn’t enough data to support making changes, conduct additional testing.

Though we didn’t originally include a third round of IA Testing in our schedule, we felt it was worth the effort of running an additional test to collect more data. This allowed us to identify actionable feedback and achieve higher rates of participant consensus to support our website changes.

Future Directions

Due to our limited time frame, we were only able to focus our testing efforts on a limited number of tasks. Because we made significant updates from the original website, including restructured navigation and renamed navigation items, we believe more testing is necessary. This will ensure that the changes we implemented will improve the overall usability of the website, and justify the time and expense of making these updates.

We would like to conduct additional rounds of IA and First Click Testing for the tasks of donating to the Treehouse Humane Society, and purchasing tickets to events. Because these are both important tasks that contribute to the fundraising efforts of the organization, they are a high priority. Ideally, we would like to create wireframes for all key tasks, and eventually develop an interactive prototype for further usability testing.